WASHINGTON -- For all the challenges facing pre-election polls -- and there are many -- the average accuracy of statewide surveys last year matched their performance in the last two off-year elections, according to a report released last week by the National Council on Public Polls (NCPP).

First established in 1969, NCPP includes many of the pollsters that conduct surveys for national media organizations. They have released annual reports on poll accuracy since 1997, focusing mostly on national surveys. They have produced reports on statewide poll accuracy since 2002.

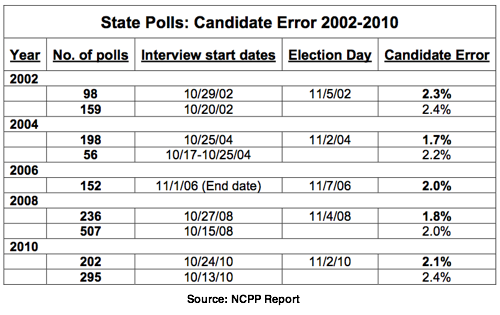

NCPP's 2010 report considers 295 surveys conducted in the 20 days leading up to the election in races for Senate and Governor (HuffPost Pollster provided NCPP with poll data used in its analysis). As in past years, NCPP also tabulated accuracy scores for a smaller number of polls (202) conducted by each organization within the final week of the campaign. For each poll, they compare the margin separating the top two candidates in the vote count to the margin forecast by the poll. They divide the difference by two, NCPP explains, to allow comparisons between their "candidate error" statistic and each poll's sampling margin of error.
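The arithmetic behind the candidate error statistic can be sketched in a few lines of Python. This is only an illustration of the calculation as described above, not NCPP's own code: the function name and the sample figures are hypothetical, and it assumes both margins are taken in the same candidate order (first candidate minus second).

```python
def candidate_error(poll_a, poll_b, vote_a, vote_b):
    """Half the absolute gap between the polled margin and the actual margin.

    All inputs are percentages for the top two candidates (a and b).
    Hypothetical helper; assumes margins are computed as a minus b.
    """
    poll_margin = poll_a - poll_b   # margin forecast by the poll
    vote_margin = vote_a - vote_b   # margin in the vote count
    return abs(poll_margin - vote_margin) / 2


# Made-up example: a poll shows 50-44, the vote count ends 49-49.
# The poll's margin is 6 points, the actual margin is 0, so the
# candidate error is 6 / 2 = 3 points.
print(candidate_error(50, 44, 49, 49))
```

Dividing by two puts the figure on roughly the same scale as a margin of error, which applies to a single candidate's share rather than to the gap between two candidates.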

They found an average candidate error in 2010 of 2.4 percentage points for all of the polls examined, slightly more than in the presidential elections of 2008 (2.0%) and 2004 (2.2%), but the same as in 2002 (2.4%), the last off-year election for which NCPP scored polls more than a week before Election Day.

Some pollsters argue that the lower turnouts of off-year elections complicate their efforts to identify "likely voters" and lead to less accuracy. The NCPP data support that theory, particularly for the final poll conducted by each organization. The final-poll candidate errors were slightly smaller in 2004 (1.7%) and 2008 (1.8%) than in 2002 (2.3%) and 2006 (2.0%), and the final-poll error for last year (2.1%) fell within the range of the last two off-year elections.

Those average statistics, however, may mask a growing number of polls producing results outside their reported margin of error. This past year, NCPP reports, one in four final-week polls (25%) "had results that fell outside the sampling margin of error of that survey." That compares to just 11% in 2006 and 16% in 2002.
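As a rough sketch of how such a share might be tallied, the Python below compares each poll's candidate error with its margin of error. The report's exact procedure is not described here, so everything in the block is an assumption: the function names are hypothetical, the poll figures are made up, and the fallback formula is the textbook 95% margin for a simple random sample with a result near 50%.

```python
import math


def textbook_margin_of_error(sample_size, z=1.96):
    """Conventional 95% margin of error, in percentage points, for a
    simple random sample with a proportion near 50%. An assumption for
    illustration; a poll's reported margin may be computed differently."""
    return 100 * z * math.sqrt(0.25 / sample_size)


def share_outside_margin(polls):
    """Fraction of polls whose candidate error exceeds their margin of error.

    `polls` is a list of (candidate_error, margin_of_error) pairs,
    both in percentage points.
    """
    outside = sum(1 for error, moe in polls if error > moe)
    return outside / len(polls)


# Four made-up polls of 600 respondents each (margin of about 4 points).
moe_600 = textbook_margin_of_error(600)
hypothetical_polls = [(1.5, moe_600), (2.4, moe_600), (4.5, moe_600), (0.5, moe_600)]
print(share_outside_margin(hypothetical_polls))  # one of the four is outside
```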

NCPP's reports also help demonstrate the continuing trend away from live interviewer polls and toward automated telephone and internet panel surveys. For 2010, NCPP found that fewer than half (46%) of the 295 polls they examined were conducted by telephone using live interviewers, while a majority were conducted using an automated telephone methodology (41%), a combination of live and automated telephone methods (2%) or over the internet (9%).

In contrast, NCPP's report eight years ago made no mention of either automated or internet surveys, and NCPP President Evans Witt confirms that the 2002 report did not include results from automated or internet polls. Automated pollsters did release results in 2002, but they were a far smaller share of public polls. A report on statewide polls that year by political scientist Joel Bloom and Jennie Pearson found automated surveys were just 17% of all the surveys they considered.

Despite the growth, NCPP found that in 2010 the mode of interview alone made little difference to horse-race accuracy. The average error was 2.4% on live interviewer surveys, slightly higher (2.6%) on automated surveys and slightly lower (1.7%) for the one firm (YouGov/Polimetrix) that conducted and reported results gathered over the internet. The lack of an overall difference between automated and live-interviewer methods is consistent with similar analyses of polling conducted in prior elections. The error for individual pollsters may differ, however, as Nate Silver found in a post-election analysis of 2010 polling.

These error calculations have some important limitations. They do not address the accuracy of national surveys that measured the "generic" vote preference for races for the U.S. House, many of which overstated the Republican margin when sampling only landline phones. The NCPP calculations also do not include polls at the Congressional district level, which often produced far greater errors than the statewide polls. Finally, as NCPP emphasizes, horse-race accuracy may not be the best measure of survey quality: "We strongly believe a poll's performance should be based on its overall reporting about the issues and the dynamics of a political campaign," they write, "and not one number."

Still, despite some high-profile misfires, doubts expressed by pollsters themselves and an eroding ability to reach and interview willing respondents, the overall accuracy of campaign polling remains surprisingly strong.